Data Modeling Techniques Explained

Share

Data fuels the modern organization, hence the business world’s acceptance that “data is the new oil.” But much like crude oil, raw data must undergo transformation. A business can only reap the true value of data when it is organized and interrelated in a meaningful way. This is the task of data modeling.

Keep reading for an introduction to two dominant data models and their modeling techniques.

What is Data Modeling?

The primary task of data modeling is to organize data in such a way as to allow businesses to extract maximum value from it.

We have a tacit understanding of entities like “customer” and “product,” as well as actions like “make a purchase” and “request a refund.” Data modeling allows us to use data to explicitly define such concepts and their relationships. On a simplistic level, we’d want to define the following:

- What kind of information and data types do we store in the “Customers” table?

- Do we record the customer’s country of origin in the “Customers” table? Or do we create a “Country” table, save all the countries there, and point the “Customers” table’s “Address” column to the relevant row of the “Country” table?

- When storing the customer’s gender, do we stole the full strings (“Male,” “Female,” and “Non-binary) or do we store these as single characters? Alternatively, if a customer chose the “Prefer not to say” option, do we store this as a NULL value or as a separate categorical variable?

While there are many data modeling solutions, some may be more appropriate than others depending on how and why a business plans to use its data. Data modeling thus seeks to create information systems that are efficient, accurate, and aligned with business requirements.

Since the inception of databases in the 1960s, many database models have been invented, but only two have kept up with commercial demands: first, the relational model, and, more recently, the dimensional model. As we’ll see below, the two models differ significantly in how they organize data, making one model better suited for specific applications than the other.

Does this apply only to relational databases? Not really. This concept is not primarily about the type of the database or the technology used bur rather about the data itself.

The Relational Model

A relational model describes data and the relationships between different data records. Relational models are used to build relational databases that organize data in tables. These tables can be linked together and they typically represent entities like “Employee” or “Store.” A link between two tables represents the relationship between the entities, such as “is employed at.”

Transactional system: The most common relational database

Relational modeling is commonly used for building transactional databases. These databases constantly undergo small updates that are made by transactions, hence their name. Transactions are processes that consist of smaller subordinate processes. For a transaction to succeed, all of its subprocesses need to succeed as well. But if one of the subprocesses fails, the entire transaction terminates.

For example, transferring money from one account to another can entail two subprocesses: (i) debiting the source account, and (ii) crediting the target account. For the transaction to complete, both of the steps need to be recorded in the database. If it were not for this requirement, accounts could lose or gain money out of nowhere.

Activities like online payments, airline ticket reservations, and hotel bookings all employ transaction processing. These business processes require a database with the ability to perform create, read, update, and delete (CRUD) operations on it constantly and in near real-time. The relational model has been the perfect choice for such systems.

In addition to maintaining the integrity of financial transactions, transaction processing systems also play a crucial role in ensuring data consistency in various other domains. In e-commerce, for instance, when a customer places an order, it triggers a series of actions such as inventory deduction, order confirmation, and shipment processing. These steps must be executed atomically to prevent issues like overselling products or failing to fulfill orders. Transaction processing systems are the backbone of such operations, guaranteeing that each step is completed successfully or none at all, thereby upholding the reliability and trustworthiness of online businesses.

Normalization

Normalization is what enables the relational model to support transactional systems.

It is an incremental process that consists of sequences of steps (called normal forms) that are applied to a relational database one after another. Normalization can be seen as a process of refinement that is iteratively applied to each table in a database. The higher the degree of the normal form, the higher the extent of refinement.

Applying a normal form to a table decomposes it into more tables. These new tables consist of the original table’s duplicate and redundant data. As we apply normal forms to the database, each normal form makes the database increasingly more granular (i.e. the state of having a number of tables).

Below is an illustration of how a highly normalized relational database might look. Note how there are many interconnected tables. A lower degree of normalization would make the database consist of fewer tables and relationships, whereas further normalizing the database would make it even more granular.

Normalization introduces new tables and makes databases more complex. However, it also removes redundancies, which is critical to improving transactional systems. Recall that transactional systems require a database that can support constant minor updates.

Let’s say we want to access a transactional database to update a customer’s address. A database in the third normal form, which is the standard normalization degree for relational schemas a nd transactional systems, would require us to update the address at only one place: we’d look up the “Customers” table, find the row that matches the customer’s unique ID, and update the value in the “Address” column.

In contrast, if our database were denormalized and we stored duplicates of the customer’s address in the “Purchases” table, we would have to update the value of the “Address” column separately for each record of purchase for that particular customer. This not only introduces more work, which can lead to delays in the transactional system, but it’s also error-prone and can cause anomalies in the database.

Relational databases are thus the ideal solution for building transactional systems. The normalized nature of the relational model minimizes the amount of work required to perform many small CRUD operations and ensures that data is always consistent and accurate.

The Dimensional Model

Retrieving a record from a transactional database requires you to go through many tables and merge them. This is fine for accessing a single record and making small changes as in the case of processing transactions, but it’s too complex and time-consuming for analysis. After all, analysts work on large amounts of data. Enter the dimensional model, a modeling technique that optimizes for mass reads.

Dimensional modeling is the predominant model of data warehouses. The job of a data warehouse is to make data easily and quickly available to facilitate analysis, reporting, and business intelligence.

This is not to say that transactional systems cannot be used for analysis—they can, and they often are. Transactional systems are the first databases that companies build. And when it’s time to start doing business intelligence, it’s often easier to use a familiar database than to build a new specialized one. However, as data starts to accumulate, companies without a data warehouse inevitably hit a wall. Transactional systems need to operate in real-time, but without a data warehouse in place, they end up competing for power whenever someone decides to fetch data for analysis.

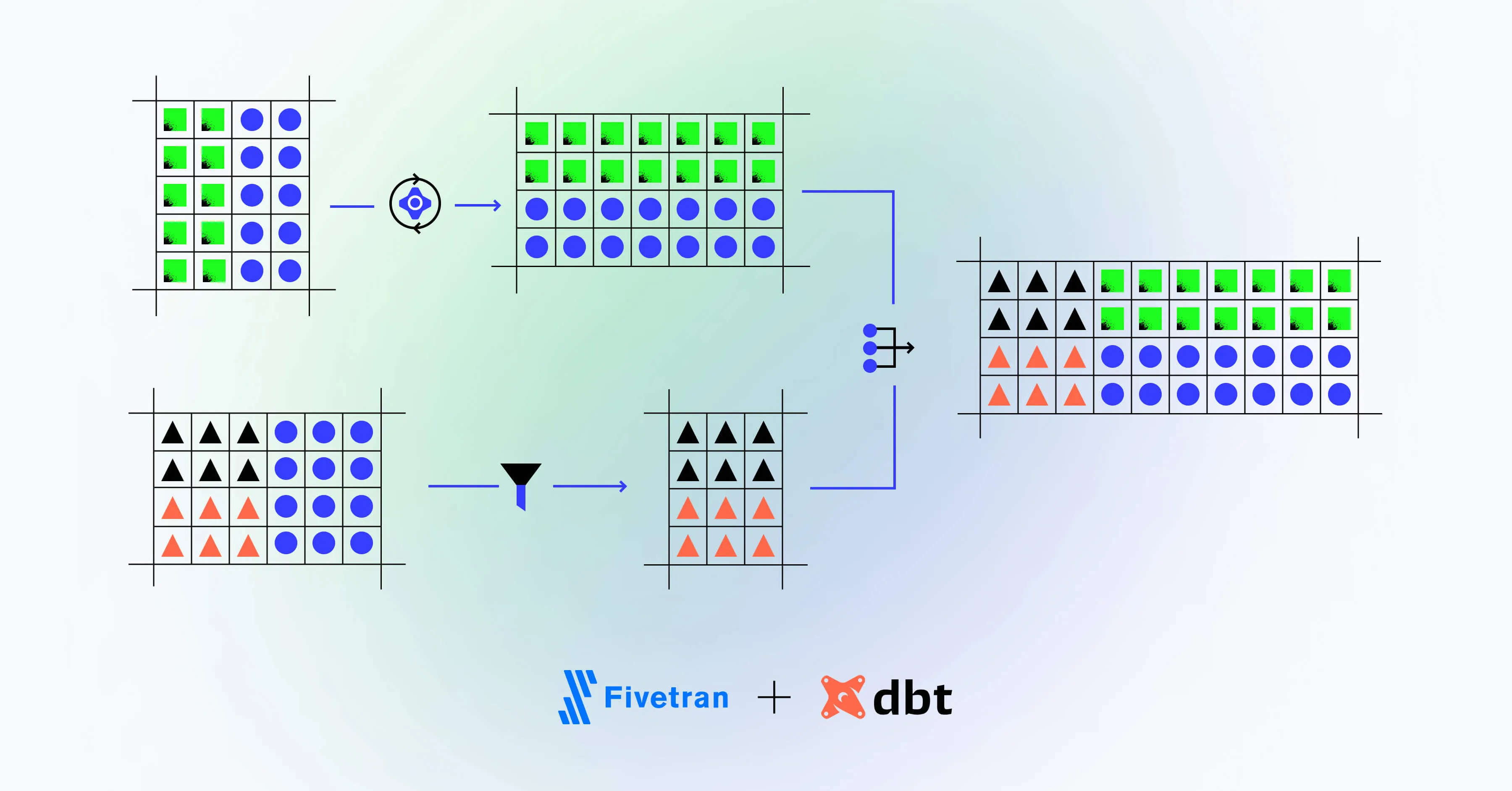

So what does the dimensional model look like? Imagine the opposite of the highly normalized relational model pictured above. If we compressed all of the relational model’s tables together, we would have a single table that looks like a big flat spreadsheet with wide rows. Now, we would normalize this table so that instead of having just a single table, we would have several. However, unlike the relational model where all tables are interrelated, in the dimensional model all tables link back only to the main table. This is known as the star schema.

Star schema

The star schema is one of the simplest dimensional models. It consists of two types of tables: one for representing “facts” and another for “dimensions.” The “facts” table stores non-aggregated, granular data pertaining to transactions (mainly numerical data), whereas “dimension” tables store categorical values that further describe the facts. As a rule, the star data model consists of one central table and several dimensions that are at most one relation away from the main table.

When visualized, the schema resembles the shape of a star, hence its name:

If you compare this illustration to the one of a relational model, you can instantly notice that the star schema’s organization is significantly simpler. This is due to the star schema’s denormalized nature. Denormalization makes databases less granular, at the cost of having data that would have otherwise been saved in a separate table now being duplicated across the existing tables. This would be a problem for a transactional system, but luckily it doesn’t matter for a data warehouse. When it comes to data warehouses, performance is more important than a perfectly granular design.

Recall that the main purpose of data warehouses is to quickly retrieve large amounts of data. Joining tables is a computationally expensive process, so querying a highly normalized relational database consisting of a hundred tables would consequently require a hundred table joins. If you were to query a database consisting of millions of rows, that many joins would make the query take a long time to execute.

By keeping the number of the tables to a handful, databases in the star schema can execute queries much faster than their relational counterparts. This makes the star schema ideal for analytics and executing queries that fetch many rows. Additionally, by storing data in a flat manner instead of spreading it across many tables, the star schema makes it easier to intuitively understand the data warehouse’s structure.

Snowflake schema

Snowflake schema is a dimensional model that visually resembles the structure of a snowflake. (Do not confuse with Snowflake, a cloud data warehouse provider!)

As you can see from the illustration, a snowflake schema is just a star schema with normalization applied to the dimension tables; we call these “subdimensions.” Its normalized structure provides some advantages such as fewer redundancies and therefore lower maintenance compared to the star schema. Fewer redundancies and duplicates also contribute to the snowflake schema’s lower memory requirements.

However, the snowflake schema’s increased granularity brings back our original problem of slow queries caused by too many joins. This might not be obvious in smaller data warehouses, but it can become a problem when the facts table grows to millions or billions of rows. If this happens, the solution is often to revert back to the star schema’s design.

Mighty Digital: Your Data Infrastructure Experts

Data modeling is one of the first and most important steps of building data infrastructure. In this article, we covered two dominant data models—the relational and the dimensional model—and explained how they can be used to support different types of business processes.

Careful planning on data organization goes a long way towards establishing future-proof data infrastructure. When done right, data modeling greatly reduces the complexity of managing your data. By allowing you to have a complete review of all of your company’s data, data modeling also mitigates the risk of not complying with data regulations

If you’re unsure how to proceed with building your data infrastructure, the experts at Mighty Digital are here to help you plan and implement every step of it. Reach out today!